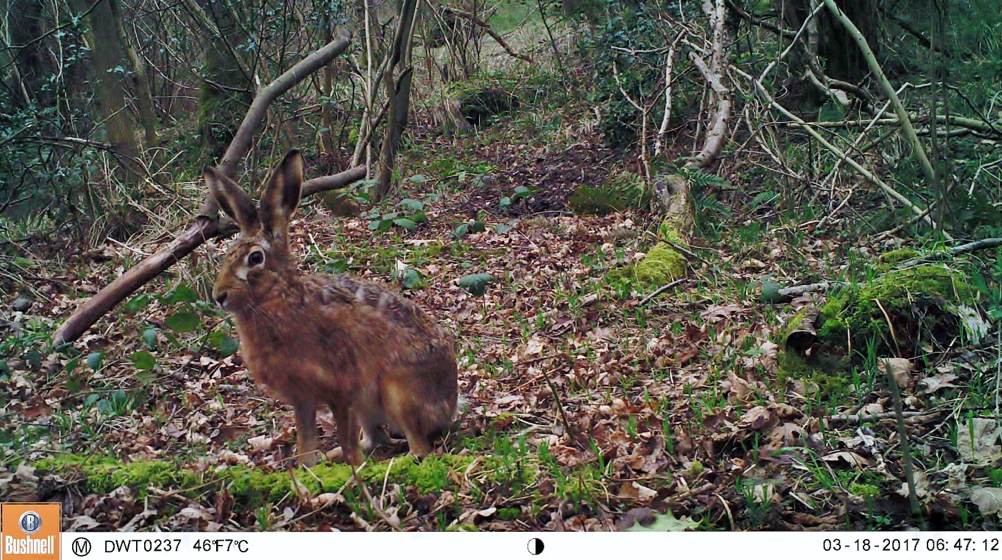

To conserve biodiversity effectively, we need to know where and in what abundance it occurs. Breeding bird surveys, which happen in many countries every year, are a great example of how high-quality biodiversity data can underpin science and policy. In contrast to birds, however, many mammal species are elusive and surprisingly poorly documented. Motion-sensing camera traps can change this, owing to the relative ease with which they can be set up across a wide area to observe and document mammals in a non-intrusive way. As a result, camera trapping is a highly active focus of research in ecology and conservation.

A major challenge for camera trapping is dealing with the sheer volume of data that can be produced. Even modest studies can rapidly generate data sets numbering tens or hundreds of thousands of images. Someone must look at each photo and record the animals captured in it. This classification process can be a huge drain on a researcher’s time and can significantly delay the ecological insights that camera trapping can provide.

In recent years, many researchers have turned to online crowdsourcing platforms, where anyone interested can help process data, including classifying camera trap photos. For example, the highly successful Snapshot Serengeti project attracted tens of thousands of participants to classify more than a million camera trap photos. An important trick of the trade is to ask multiple participants to classify each photo. Researchers can then aggregate those “votes” to calculate a consensus classification. Once a consensus is reached for a photo, it can be “retired” (i.e., no longer shown to participants) so that their effort goes to the other images in the data set.
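As a rough illustration of the vote-aggregation idea, the Python sketch that follows computes a plurality consensus for a single photo and decides whether it can be retired. The function name `consensus` and the thresholds `min_votes` and `min_agreement` are hypothetical, chosen only for the example; they are not the actual retirement rules used by Snapshot Serengeti or MammalWeb.

```python
from collections import Counter


def consensus(votes, min_votes=5, min_agreement=0.8):
    """Return (label, retire) for one photo.

    votes: species labels submitted by different participants,
           e.g. ["fox", "fox", "nothing here"].
    The thresholds are hypothetical example values.
    """
    if not votes:
        return None, False
    # Most common label; Counter breaks ties in favour of the label seen first.
    label, count = Counter(votes).most_common(1)[0]
    share = count / len(votes)
    # Retire only when there are enough votes AND enough of them agree.
    retire = len(votes) >= min_votes and share >= min_agreement
    return label, retire


print(consensus(["fox", "fox", "fox", "fox", "badger"]))  # ('fox', True)
print(consensus(["fox", "badger", "fox"]))                # ('fox', False)
```

Treating “nothing here” as just another label keeps the rule simple, but it also means a missed animal counts the same as a wrong species, which is exactly the kind of assumption worth revisiting.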

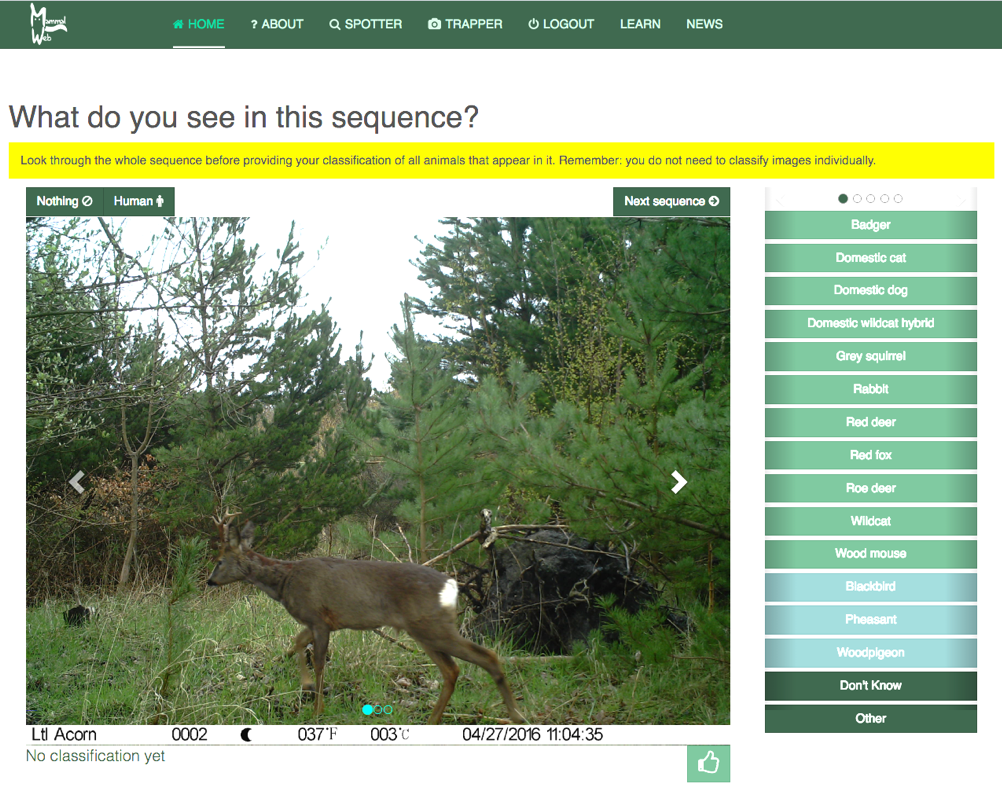

Motivated by the need to find better ways to monitor mammals in the United Kingdom, CEG staff collaborated with staff at Durham Wildlife Trust, together with many volunteers from around the country, to start MammalWeb, a citizen science project for monitoring wild mammals in north-east England. The project is unusual in that MammalWeb citizen scientists can participate in one or both of two ways: as a “Trapper”, who sets up camera traps and uploads photos and associated data to our web platform, and as a “Spotter”, who logs in to help classify those photos (Fig. 1). One challenge for MammalWeb is that we have a much smaller group of Spotters (hundreds of users) than big, international projects like Snapshot Serengeti (tens of thousands of users). We therefore wanted to find out whether consensus classifications could be reached even more economically, so that user effort can be focused on the photos that need more scrutiny. If this is possible, crowdsourced camera trapping projects big and small can all benefit.

What all this means is that, when crowdsourcing the classification of camera trap photos and calculating consensus classifications, it may be helpful to factor in (1) differences in detectability between species, and (2) the relative influence of different types of incorrect classification (where species have been missed versus where they have been misidentified). Taking both into account can better focus user classification effort on the photos that need more scrutiny.
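To make the two factors concrete, here is a hypothetical Python sketch (not MammalWeb's actual method) that scores whether a species is really present in a photo. It assumes each Spotter independently reports the species with probability `detectability` when it is present (so a miss happens with probability 1 − `detectability`), and with probability `false_positive` when it is absent (a misidentification). The function name, parameter names and default values are all illustrative assumptions.

```python
import math


def _logistic(x):
    # Numerically stable logistic function: maps log-odds to a probability.
    if x >= 0:
        return 1 / (1 + math.exp(-x))
    z = math.exp(x)
    return z / (1 + z)


def presence_posterior(n_reported, n_votes, detectability,
                       false_positive=0.02, prior=0.5):
    """Hypothetical posterior probability that a species is in the photo.

    n_reported:     Spotters who reported the species
    n_votes:        total Spotters who classified the photo
    detectability:  assumed P(report | present), species-specific
    false_positive: assumed P(report | absent), i.e. misidentification rate
    """
    n_missed = n_votes - n_reported
    # Log-likelihood ratio of "present" versus "absent" under the assumed model.
    llr = (n_reported * math.log(detectability / false_positive)
           + n_missed * math.log((1 - detectability) / (1 - false_positive)))
    prior_log_odds = math.log(prior / (1 - prior))
    return _logistic(prior_log_odds + llr)


# Two of five Spotters report the species in both cases.
p_elusive = presence_posterior(2, 5, detectability=0.4)       # roughly 0.99
p_conspicuous = presence_posterior(2, 5, detectability=0.9)   # roughly 0.68
print(p_elusive, p_conspicuous)
```

Under these assumptions, three “misses” say little against a hard-to-spot species, so the photo could be retired early, whereas the same votes leave real doubt about a conspicuous species and suggest showing the photo to more Spotters.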

As projects like MammalWeb, Snapshot Serengeti and eMammal gather a large body of classified camera trap photos, these photos can serve as training data for machine learning algorithms that classify wildlife photos automatically. Early results look very promising, and they emphasise how critical it is for researchers to share their data and results, so that we can build on each other’s progress to meet the need for large-scale monitoring in this time of rapid ecological change.

More generally, the MammalWeb project has also demonstrated that citizen science is not limited to scientists crowdsourcing, or “outsourcing”, their work to volunteers. MammalWeb citizen scientists have not only been instrumental in setting up camera traps to observe wild mammals, but have also taken the initiative and started their own wildlife surveys. Some use the data they collect to inform public planning and engage policy makers, while others develop and deliver camera trapping workshops to other wildlife groups. Can citizen science camera trapping be as successful as other citizen-initiated remote sensing projects such as aerial mapping?
